I am a Ph.D. student at The Chinese University of Hong Kong (CUHK), advised by Prof. Yixuan Yuan. I received my M.Eng. degree from Xiamen University, advised by Prof. Xinghao Ding and Prof. Yue Huang, and before that my B.Eng. degree, also from Xiamen University. I am dedicated to developing creative, efficient, and responsible multi-modal and 3D vision algorithms that enable us to perceive, simulate, and manipulate the real-world scenes we live in and interact with. My north star is to foster real-world intelligent machines and to contribute to scientific innovation, for example in biomedicine.

Email / CV / Google Scholar / GitHub / Twitter

Chenxin Li*, Hengyu Liu*, Yifan Liu*, Brandon Y. Feng, Wuyang Li, Xinyu Liu, Zhen Chen, Jing Shao, Yixuan Yuan (* Equal Contribution)

Preprint, 2024

[Project] [ArXiv] [Video] [Code]

A pioneering exploration into high-fidelity medical video generation on endoscopy scenes

Yifan Liu*, Chenxin Li*, Chen Yang, Yixuan Yuan (* Equal Contribution)

Preprint, 2024

[Project] [ArXiv] [Video] [Code]

Real-time surgical reconstruction with Gaussian Splatting representation

Chenxin Li*, Brandon Y. Feng*, Zhiwen Fan*, Panwang Pan, Zhangyang Wang (* Equal Contribution)

International Conference on Computer Vision (ICCV), 2023

[Project] [ArXiv] [Video] [Code]

NeRF with multi-modal IP information instillation

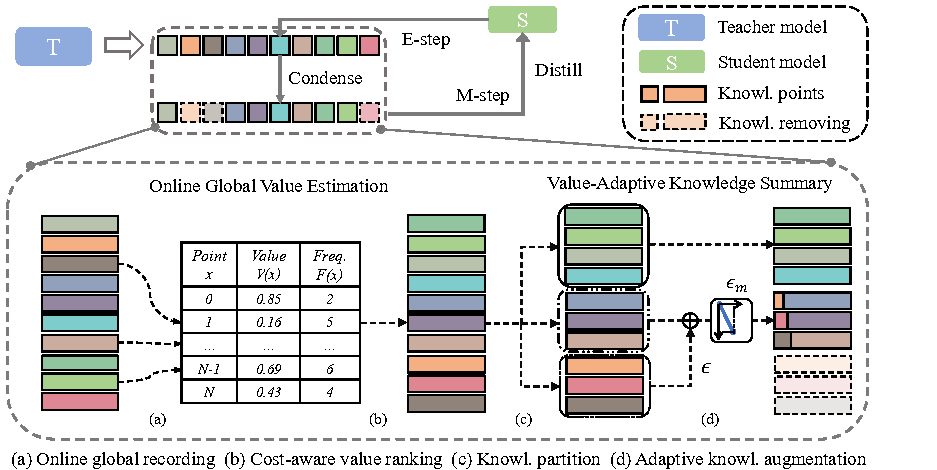

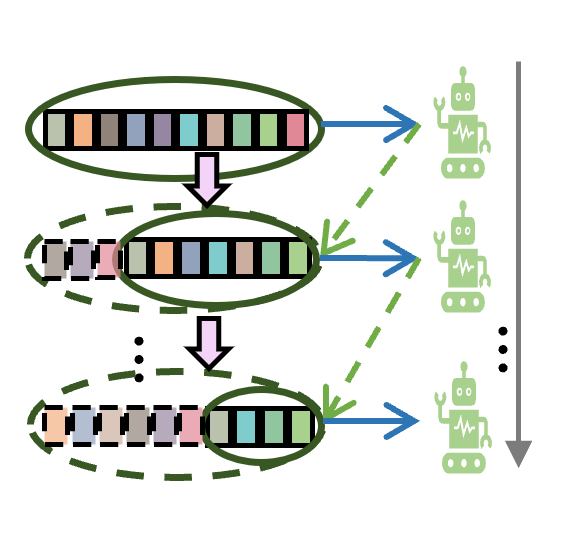

Chenxin Li, Mingbao Lin, Zhiyuan Ding, Nie Lin, Yihong Zhuang, Xinghao Ding, Yue Huang, Liujuan Cao

European Conference on Computer Vision (ECCV), 2022

[PDF] [Supp] [ArXiv] [Code]

Co-design of dataset and model distillation

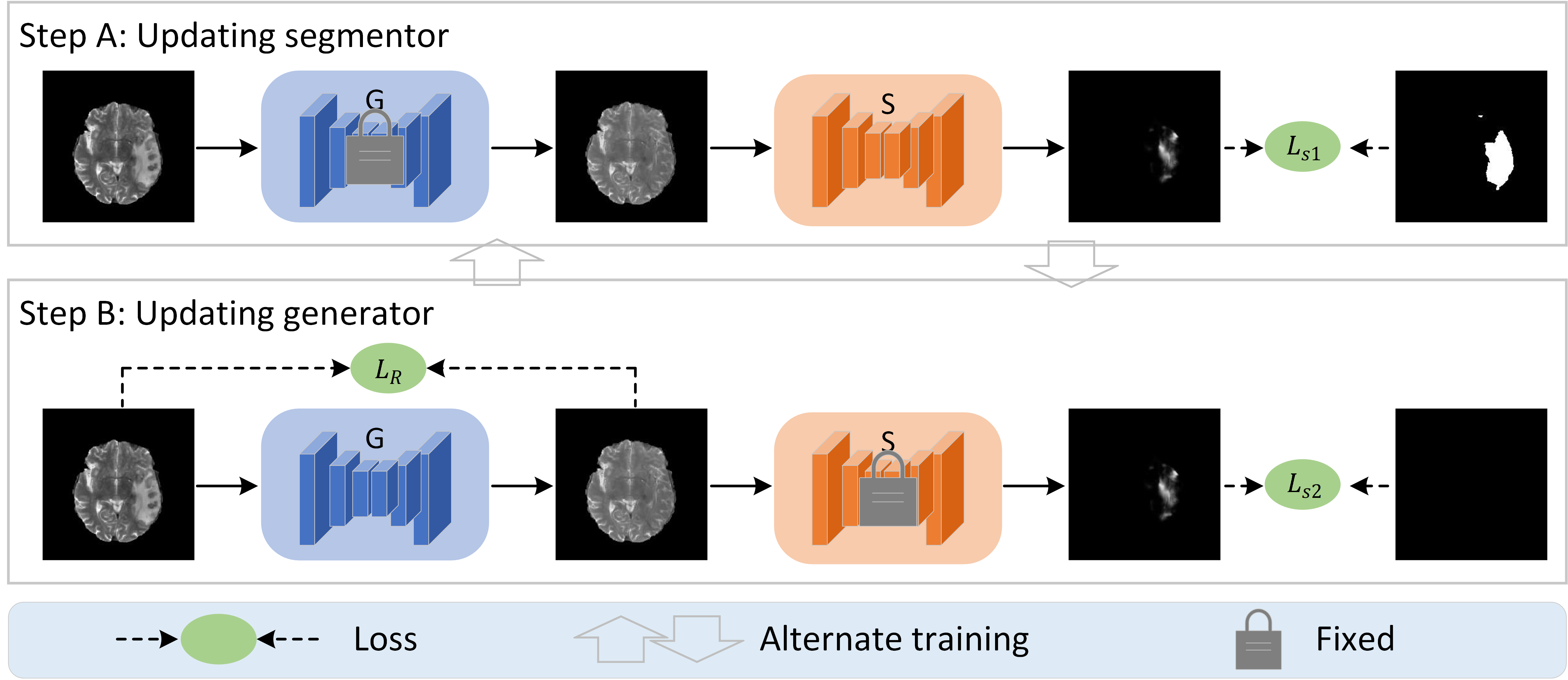

Yunlong Zhang*, Chenxin Li*, Xin Lin, Liyan Sun, Yihong Zhuang, Xinghao Ding, Yue Huang, Yizhou Yu (* Equal Contribution)

International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), 2021

[PDF] [ArXiv] [Code]

Generative AI for lesion-centric synthesis

Cas6D: Learning to Estimate 6DoF Pose from Limited Data: A Few-Shot, Generalizable Approach using RGB Images

Panwang Pan*, Zhiwen Fan*, Brandon Y. Feng, Peihao Wang, Chenxin Li, Zhangyang Wang (* Equal Contribution)

International Conference on 3D Vision (3DV), 2024

[ArXiv] [Code]

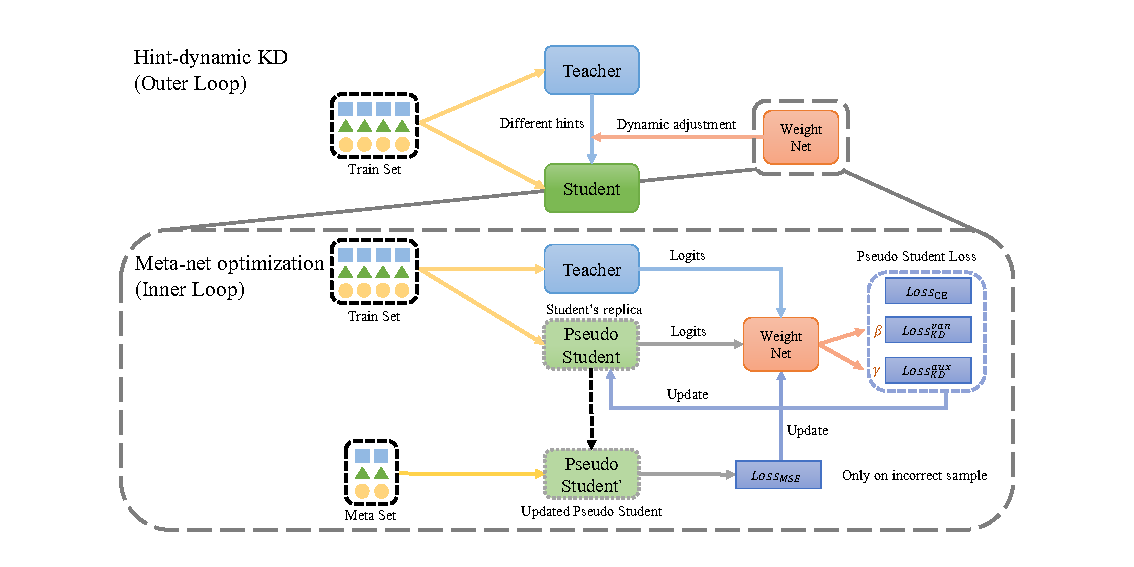

Hint-Dynamic Knowledge Distillation

Yiyang Liu*, Chenxin Li*, Xiaotong Tu, Xinghao Ding, Yue Huang (* Equal Contribution)

IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2023

[PDF] [ArXiv]

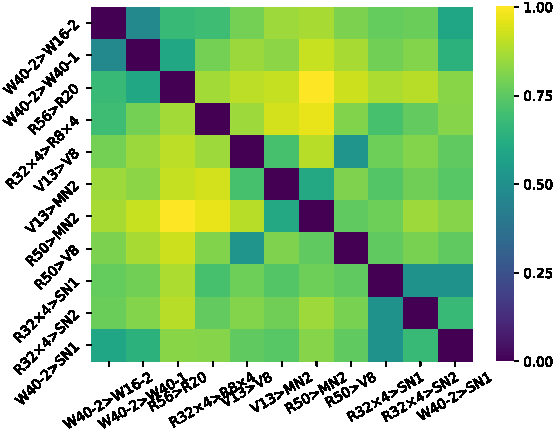

Consistent Posterior Distributions under Vessel-Mixing: A Regularization for Cross-Domain Retinal Artery/Vein Classification

Chenxin Li, Yunlong Zhang, Zhehan Liang, Xinghao Ding, Yue Huang

IEEE International Conference on Image Processing (ICIP), 2021

[PDF] [ArXiv]

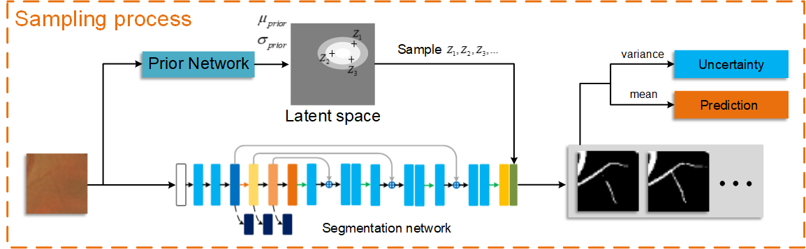

Hierarchical Deep Network with Uncertainty-aware Semi-supervised Learning for Vessel Segmentation

Chenxin Li, Wenao Ma, Liyan Sun, Xinghao Ding, Yue Huang, Guisheng Wang, Yizhou Yu

Neural Computing and Applications (NCA), 2021

[PDF] [ArXiv] [Code]

Domain Generalization on Medical Imaging Classification using Episodic Training with Task Augmentation

Chenxin Li, Qi Qi, Xinghao Ding, Yue Huang, Dong Liang, Yizhou Yu

Computers in Biology and Medicine (CBM), 2021

[PDF] [ArXiv] [Code]

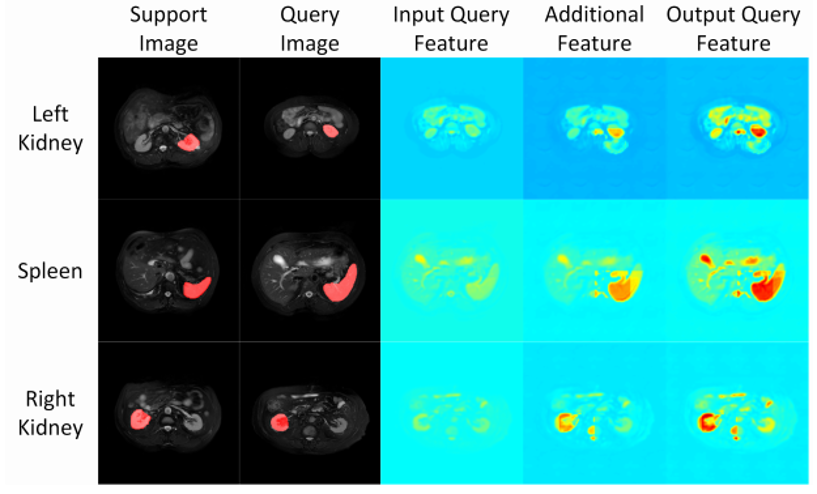

Few-shot Medical Image Segmentation using a Global Correlation Network with Discriminative Embedding

Liyan Sun*, Chenxin Li*, Xinghao Ding, Yue Huang, Guisheng Wang, Yizhou Yu, John Paisley (* Equal Contribution)

Computers in Biology and Medicine (CBM), 2021

[PDF] [ArXiv] [Code]

Conference Reviewer

Journal Reviewer
